In the R&D project Connected Drone 2, eSmart Systems, together with the 22 Norwegian utilities in the project, has used digital twins to develop deep learning algorithms for semi-autonomous drone navigation.

Improving efficiency and quality of drone power line inspections

Many Norwegian utilities use helicopters to perform their mandatory, regular inspections of the grid. Drones, however, are a simple, versatile, environmentally friendly and potentially low-cost alternative for line and pole inspection. The utilities in the project have therefore identified two major use cases for drone inspections: ad hoc outage inspection (fault finding) and planned top and line inspections.

To improve efficiency and standardize the quality of the output of these drone missions, automation is needed. At present, most drone operations among the utilities in the project are manual, and deep learning and image recognition have the potential to increase the degree of automation significantly.

Many drones are equipped with RTK GNSS technology (e.g. DJI Matrice 210 RTK), which allows centimeter-level positioning precision and highly accurate waypoint mission plans. However, relying on waypoints alone has several drawbacks. First, the locations of the masts are often unknown or inaccurate. Second, to capture images at constant distances and angles from the towers and power lines (as required for image analytics), the drone needs additional ways of understanding its position relative to the inspected objects.

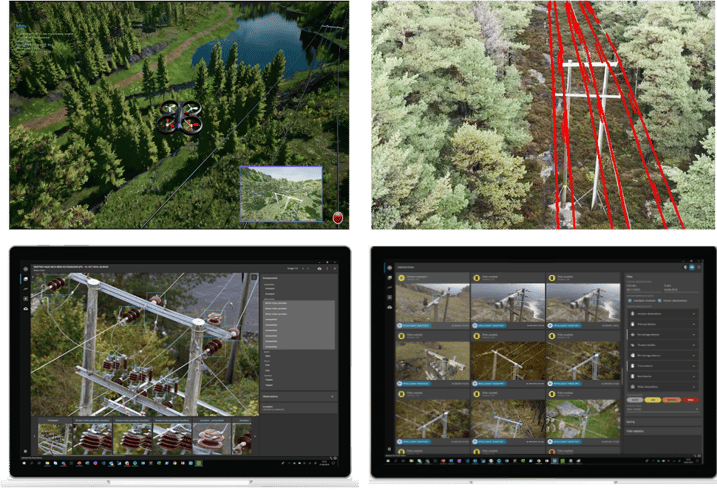

This is a gap that eSmart’s AI and image recognition experience can fill. The drone perception AI, built on our core object detection technology, enables drones to recognize lines and poles and to estimate their own position relative to them.

As a result, inspection images can be captured at the same distance and angle every time, which improves the AI capabilities of eSmart’s Connected Drone software. In addition, we deploy our autonomy software on a companion computer onboard the drone (edge computing), so that it is not affected by connection loss or limited bandwidth.

Figure 1: Illustration of the process, from autonomous data collection in the digital twin, through transfer of learning from the digital twin to real life using edge processing, to cloud processing of the collected images in the Connected Drone software for fault detection.

Automatic or semi-autonomous navigation of drones over power lines is often a high-risk operation, and developing and testing algorithms for autonomous vehicles in the real world is expensive and time-consuming. To take advantage of recent advances in machine intelligence and deep learning, we need to collect a large amount of annotated (ground truth) training data in a variety of conditions and environments. This is only feasible with a digital twin.

In a digital twin, we can collect synthetic data and test hypotheses without the risk of causing serious damage to equipment and infrastructure.

How we created the digital twin

In cooperation with Nordic Media Lab, we developed a highly realistic simulation environment (digital twin).

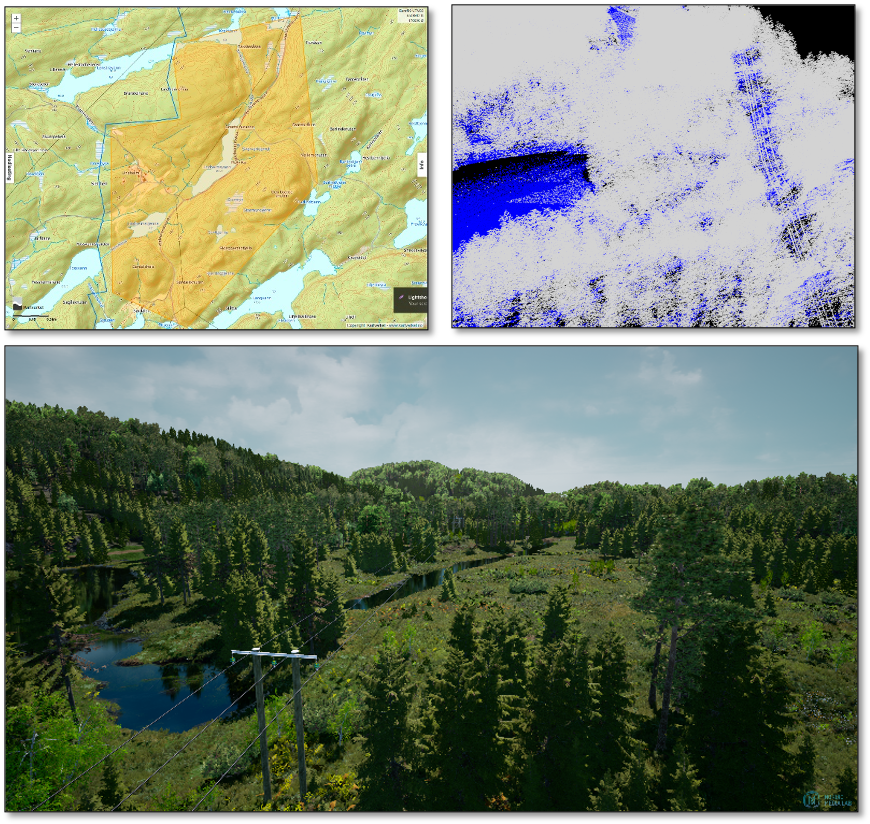

The environment used for simulation training is a digital twin of the Lindheimsvann area in Kragerø, Norway. It was built from publicly available elevation data: a lidar dataset (point cloud), which was used to produce a digital terrain map (DTM). The DTM was then converted to a 3D mesh in Unreal Engine 4. RGB images of the area were used to manually confirm and position environmental assets, such as trees, bushes and rocks, that are close to the power lines. Important infrastructure such as masts, houses and roads was validated using GPS locations.
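As a rough illustration of the point-cloud-to-DTM step, the sketch below grids a lidar point cloud and keeps the lowest return in each cell as a crude ground estimate. This is a simplifying assumption, not the workflow used for the Lindheimsvann twin. Real DTM production relies on proper ground classification and interpolation of empty cells. The function name `point_cloud_to_dtm` and the 1 m default cell size are hypothetical.

```python
import math
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, projected coordinates


def point_cloud_to_dtm(points: Iterable[Point],
                       cell_size: float = 1.0) -> Dict[Tuple[int, int], float]:
    """Rasterize a lidar point cloud into a coarse terrain grid.

    Hypothetical helper: each grid cell keeps the lowest z value that falls
    inside it. Vegetation and buildings produce higher returns, so the
    minimum is a crude stand-in for a proper ground classification.
    """
    dtm: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in dtm or z < dtm[cell]:
            dtm[cell] = z
    return dtm


if __name__ == "__main__":
    # Two returns in the same 1 m cell: a treetop (12.5 m) and the ground (2.0 m).
    cloud = [(0.2, 0.3, 12.5), (0.7, 0.9, 2.0), (1.5, 0.1, 2.4)]
    print(point_cloud_to_dtm(cloud))
```

The resulting grid of heights could then be turned into a mesh. Cells with no ground returns (for example under dense canopy) would need interpolation, which this sketch leaves out.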

When all assets were in place, the scene was verified against the original point cloud. The point cloud was also used to determine the correct sag (curvature) of the power line cables.
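One way to recover the cable shape from the point cloud is to fit a curve to the cable points of each span. The sketch below assumes the points have already been projected onto the vertical plane of the span as (s, z) pairs. It fits a parabola, the usual small-sag approximation of a catenary, by least squares. The helpers `fit_parabola` and `midspan_sag` are hypothetical, and the article does not say which curve model was actually used.

```python
from typing import List, Sequence, Tuple


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for k in range(col, 4):
                a[r][k] -= f * a[col][k]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (a[r][3] - sum(a[r][k] * x[k] for k in range(r + 1, 3))) / a[r][r]
    return x


def fit_parabola(samples: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*s**2 + b*s + c to cable points (s, z).

    s: horizontal distance along the span from the first attachment point (m)
    z: cable height (m)
    """
    if len(samples) < 3:
        raise ValueError("need at least three cable points")
    # Rescale s to [-1, 1] so the normal equations stay well conditioned.
    scale = max(abs(s) for s, _ in samples) or 1.0
    us = [(s / scale, z) for s, z in samples]
    # Normal equations built from power sums of u and of z * u**k.
    sp = [sum(u ** k for u, _ in us) for k in range(5)]
    sz = [sum(z * u ** k for u, z in us) for k in range(3)]
    m = [[sp[4], sp[3], sp[2]],
         [sp[3], sp[2], sp[1]],
         [sp[2], sp[1], sp[0]]]
    a_u, b_u, c_u = _solve3(m, [sz[2], sz[1], sz[0]])
    # Undo the rescaling: z = a_u*(s/scale)**2 + b_u*(s/scale) + c_u.
    return a_u / scale ** 2, b_u / scale, c_u


def midspan_sag(coeffs: Tuple[float, float, float], span_m: float) -> float:
    """Sag at mid-span, measured from the straight chord between the
    attachment points at s = 0 and s = span_m (equals a * span_m**2 / 4)."""
    a, _, _ = coeffs
    return a * span_m ** 2 / 4.0


if __name__ == "__main__":
    # Synthetic 200 m span: attachments at 25 m, lowest point at 15 m.
    pts = [(float(s), 0.001 * (s - 100) ** 2 + 15.0) for s in range(0, 201, 10)]
    print(f"sag = {midspan_sag(fit_parabola(pts), 200.0):.2f} m")
```

For a parabola, the mid-span sag relative to the chord is a·L²/4 whatever the values of b and c, which is why `midspan_sag` only needs the quadratic coefficient. A full catenary fit would need an iterative solver instead of closed-form normal equations.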

To simulate falling trees in the environment, we had to research the physics of how trees fall and how lines bend or possibly break on impact. Several tree types were modelled to create realistic variation in the training material.

To simulate drones within the digital twin, we integrated Microsoft AirSim, an open-source simulator for autonomous vehicles. AirSim provides a platform for AI research to experiment with deep learning, computer vision and reinforcement learning algorithms for autonomous vehicles. It also exposes APIs to retrieve data and control vehicles in a platform-independent way.

Figure 2: From 2D map, to lidar 3D point cloud, to digital twin. The digital twin of the Lindheimsvann area.

The advantages of the digital twin

The simulated drones can collect data in the simulation environment very efficiently, using as many cameras as needed in a single flight. The simulation environment also provides the ground truth for the drone telemetry and for the position of every component in the environment. This is important for building deep learning algorithms, because it allows training data to be annotated automatically.

The digital twin allows unlimited trial and error. The weather conditions, the environment and its individual components can all be changed easily to create the necessary variation in the training material.
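This kind of variation is often produced by sampling a fresh random scene configuration for each recording session, an approach known as domain randomization. The sketch below only covers the sampling step. The `SceneConfig` fields, their ranges and the function name `sample_scene` are illustrative assumptions, not the project's settings. AirSim does offer weather controls (for example `simSetWeatherParameter`), but the article does not describe how such settings were applied, so the sketch stops before that point.

```python
import random
from dataclasses import dataclass

TREE_TYPES = ("birch", "pine", "spruce")


@dataclass(frozen=True)
class SceneConfig:
    """One randomized variant of the digital twin (all fields hypothetical)."""
    rain: float               # 0.0 (dry) .. 1.0 (heavy rain)
    fog: float                # 0.0 .. 0.5
    sun_elevation_deg: float  # lighting condition
    tree_type: str            # which vegetation model to spawn near the line
    drone_offset_m: float     # lateral start offset from the power line


def sample_scene(rng: random.Random) -> SceneConfig:
    """Draw one scene configuration uniformly from illustrative ranges."""
    return SceneConfig(
        rain=rng.uniform(0.0, 1.0),
        fog=rng.uniform(0.0, 0.5),
        sun_elevation_deg=rng.uniform(5.0, 60.0),
        tree_type=rng.choice(TREE_TYPES),
        drone_offset_m=rng.uniform(5.0, 30.0),
    )


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so a dataset can be regenerated
    for _ in range(3):
        print(sample_scene(rng))
```

Passing in an explicit `random.Random` instance rather than using the module-level functions makes each generated dataset reproducible from its seed.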

Last but not least, the digital twin can be connected to its real counterpart. Navigation algorithms developed in simulation can be tested on a real drone (hardware in the loop), reducing the risk of failure when moving from the simulation environment to the real environment.

How do we make use of the digital twin?

By utilizing a digital twin simulation environment, we can focus on building the drone perception module. The perception part has two responsibilities:

- Localize the drone’s position in 3D space

- Detect and locate objects in the environment (masts, trees, power lines) for the navigation/inspection task.

Drone localization is normally provided by the simulator or by the drone manufacturer. We therefore focus on developing vision-based object detection and localization algorithms.

- Use case 1: Outage Inspection

To train a drone to recognize the single most common cause of outages, namely trees or vegetation, we needed to be able to simulate falling trees. Nordic Media Lab created three tree types (birch, pine and spruce) and implemented the physics needed to realistically simulate a tree that has fallen over the power line.

By randomly generating falling trees in the simulation environment, we can collect data that is usually very sparse (such events are often not documented with images). We can then build models that run onboard and prompt the drone to document such an event more carefully. The images captured by the drone can then be sent back to the operators and into the Connected Drone software, where the points of interest detected by the drone can be visualized on a map.
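To show how random tree falls can come with a ground-truth label for free, the sketch below places a tree beside a straight span, picks a random fall direction, and checks in plan view whether the fallen trunk crosses the line. This is a deliberate simplification that ignores conductor height and the fall dynamics, which the simulator's physics would handle. `TreeFall`, `random_tree_fall` and the height and offset ranges are hypothetical.

```python
import math
import random
from dataclasses import dataclass
from typing import Tuple

TREE_TYPES = ("birch", "pine", "spruce")


@dataclass(frozen=True)
class TreeFall:
    """Hypothetical plan-view description of one simulated tree fall.

    The power line span runs along the x-axis from x = 0 to x = span_m (y = 0).
    """
    tree_type: str
    base_x: float       # stump position along the span (m)
    base_y: float       # signed lateral distance of the stump from the line (m)
    height: float       # tree height (m)
    heading_rad: float  # fall direction, measured from the +x axis

    def tip(self) -> Tuple[float, float]:
        """Plan-view position of the treetop after the fall."""
        return (self.base_x + self.height * math.cos(self.heading_rad),
                self.base_y + self.height * math.sin(self.heading_rad))

    def crosses_line(self, span_m: float) -> bool:
        """Ground-truth label: does the fallen trunk cross the span in plan view?"""
        tip_x, tip_y = self.tip()
        if self.base_y * tip_y > 0:  # stump and tip on the same side of the line
            return False
        if self.base_y == tip_y:  # only reachable if both are 0: trunk along the line axis
            return max(self.base_x, tip_x) >= 0.0 and min(self.base_x, tip_x) <= span_m
        t = self.base_y / (self.base_y - tip_y)  # fraction of the trunk where y = 0
        cross_x = self.base_x + t * (tip_x - self.base_x)
        return 0.0 <= cross_x <= span_m


def random_tree_fall(rng: random.Random, span_m: float,
                     max_offset_m: float = 25.0) -> TreeFall:
    """Place a tree beside the span and let it fall in a random direction."""
    return TreeFall(
        tree_type=rng.choice(TREE_TYPES),
        base_x=rng.uniform(0.0, span_m),
        base_y=rng.choice((-1.0, 1.0)) * rng.uniform(2.0, max_offset_m),
        height=rng.uniform(10.0, 30.0),
        heading_rad=rng.uniform(0.0, 2.0 * math.pi),
    )


if __name__ == "__main__":
    rng = random.Random(1)  # fixed seed for reproducible datasets
    events = [random_tree_fall(rng, span_m=150.0) for _ in range(1000)]
    hits = sum(e.crosses_line(150.0) for e in events)
    print(f"{hits} of {len(events)} simulated falls cross the line")
```

Because each event carries its own label, images rendered from both crossing and non-crossing falls can be used as positive and negative examples without manual annotation.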

Figure 3: Typical examples of trees falling on power lines and possibly causing outages. Early detection can be simplified by drones that recognize a tree over the line using image recognition algorithms. The major obstacle to developing such algorithms is the lack of real training data from drones. A simulation environment may augment the dataset enough that the algorithms also work in real-life situations.

- Use case 2: Line inspection and top inspection

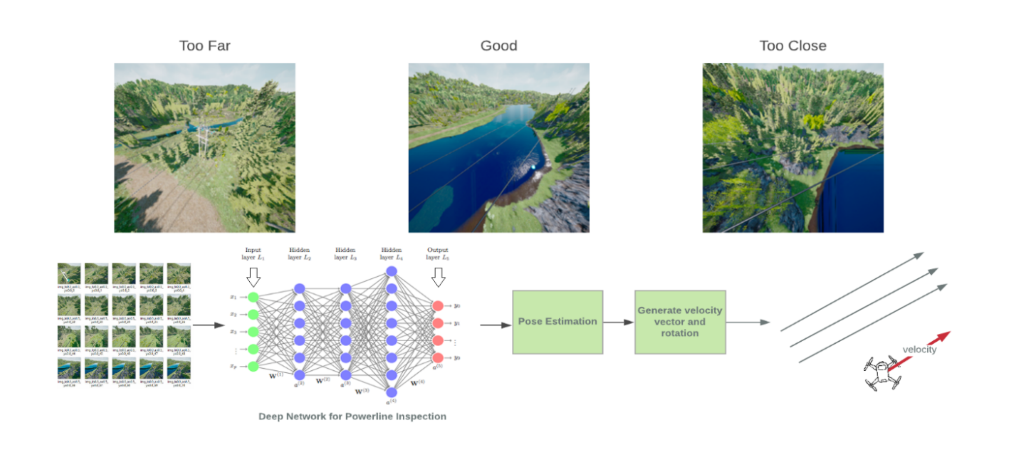

To fly autonomously along the power line or around the pole, the drone must be able to estimate its position relative to the power line or pole. We therefore collected a large dataset of images taken from various positions and orientations of the drone relative to the power line. We then trained a lightweight deep learning model on this dataset to help the drone estimate its position, for example whether it is at a good distance, too far from or too close to the line (see Figure 4). Based on this perception, the drone can adjust its direction and velocity accordingly (keep its direction, fly closer or fly away). Although the general idea is quite simple, the implementation is complicated by the complexity of 3D space, the drone controller model and safety requirements.
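Because the simulator knows both the drone pose and the cable geometry, each training image can be labeled automatically. The sketch below shows that labeling step together with a naive mapping from the predicted class to a lateral velocity command. It assumes a straight conductor segment and illustrative distance thresholds (`TOO_CLOSE_M`, `TOO_FAR_M`). The class names mirror the three cases in Figure 4, but the thresholds, the function names and the constant-speed command are hypothetical, not the project's controller. At flight time, the trained model would predict the label from the image alone.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Illustrative thresholds (assumptions, not project values).
TOO_CLOSE_M = 8.0
TOO_FAR_M = 15.0


def distance_to_segment(p: Vec3, a: Vec3, b: Vec3) -> float:
    """Euclidean distance from point p to segment a-b (straight conductor approximation)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    denom = sum(c * c for c in ab)
    # Projection parameter of p onto the segment, clamped to its end points.
    t = 0.0 if denom == 0 else max(0.0, min(1.0, sum(x * y for x, y in zip(ap, ab)) / denom))
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)


def label_from_ground_truth(drone: Vec3, line_start: Vec3, line_end: Vec3) -> str:
    """Class label for an image taken at the drone's ground-truth position."""
    d = distance_to_segment(drone, line_start, line_end)
    if d < TOO_CLOSE_M:
        return "too_close"
    if d > TOO_FAR_M:
        return "too_far"
    return "good"


def lateral_command(label: str, speed_mps: float = 1.0) -> float:
    """Map a predicted class to a lateral velocity; positive moves away from the line."""
    return {"too_close": speed_mps, "good": 0.0, "too_far": -speed_mps}[label]


if __name__ == "__main__":
    a, b = (0.0, 0.0, 20.0), (100.0, 0.0, 20.0)  # conductor 20 m above ground
    for y in (5.0, 10.0, 20.0):
        lbl = label_from_ground_truth((50.0, y, 20.0), a, b)
        print(y, lbl, lateral_command(lbl))
```

A real controller would also have to smooth noisy per-frame predictions and respect the safety limits mentioned above. This is part of why the implementation is harder than the idea.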

Figure 4: Illustration of the line navigation algorithms. The drone is trained to recognize its position with respect to the power line and to adjust accordingly, depending on whether it is at a good distance, too close or too far.

What is next? From simulation to real-world

Using the digital twin as described above is essential for realizing drone missions in real environments. We have shown that we can autonomously navigate a drone around poles and along power lines in the digital twin simulation environment. The drone can also detect trees that have fallen over the power line along its route.

Many challenges remain before autonomous drones can be used for real-life power line inspections. One of the biggest is bridging the gap between synthetic and real data. Deep learning requires huge amounts of data, but collecting labelled data in the real environment is difficult, expensive and time-consuming. Thanks to digital twins, we can now collect as many images as required and train several models rapidly. However, can models trained in a synthetic environment work in real life? Can we at least reduce the number of real-life examples needed and use real examples only to fine-tune the algorithms? We have tried several techniques (domain randomization, mixed datasets, etc.), and the results are promising. We expect to perform the first field tests in the near future.
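One common way to build a mixed dataset is to draw each training batch with a fixed share of real images and fill the rest with synthetic ones. The sketch below is a generic illustration of that idea. The function name `mixed_batch` and the `real_fraction` parameter are hypothetical, and the article does not state which ratios or training schedules were tried.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def mixed_batch(synthetic: Sequence[T], real: Sequence[T], batch_size: int,
                real_fraction: float, rng: random.Random) -> List[T]:
    """Sample one training batch mixing synthetic and real examples.

    real_fraction is the share of the batch drawn from the (small) real set.
    Sampling is with replacement, so a few real images can be reused often.
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be in [0, 1]")
    n_real = round(batch_size * real_fraction) if real else 0
    batch = rng.choices(real, k=n_real) + rng.choices(synthetic, k=batch_size - n_real)
    rng.shuffle(batch)  # avoid a fixed real/synthetic order within the batch
    return batch


if __name__ == "__main__":
    rng = random.Random(0)
    synthetic = [("synthetic", i) for i in range(10_000)]
    real = [("real", i) for i in range(50)]
    batch = mixed_batch(synthetic, real, batch_size=32, real_fraction=0.25, rng=rng)
    print(sum(1 for src, _ in batch if src == "real"), "real images in batch")
```

Fine-tuning on real examples only, as raised in the questions above, would correspond to a later training phase with `real_fraction=1.0`.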
