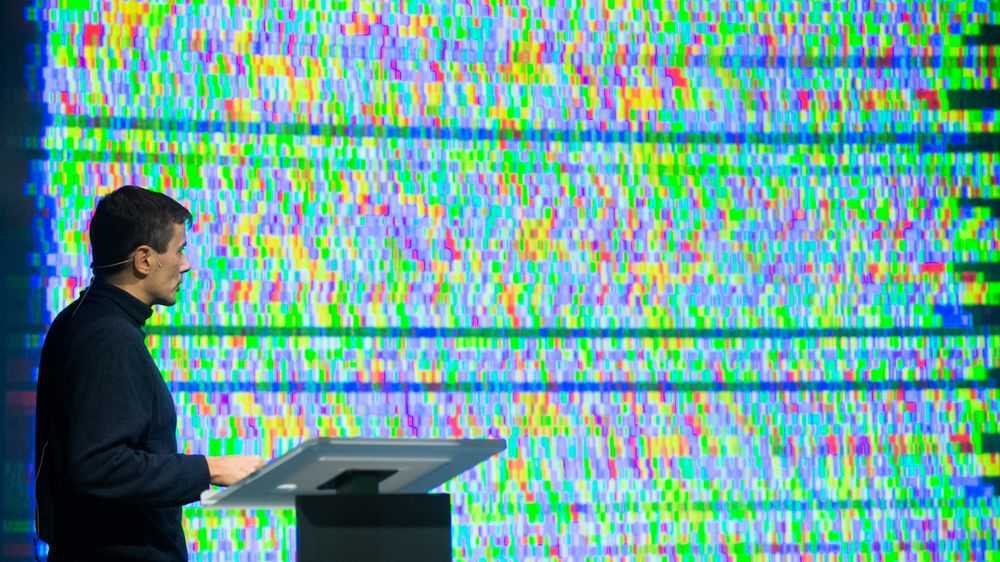

The energy and utility industry continuously faces new challenges, and given today’s rapid pace of technological change, Artificial Intelligence (AI) will undoubtedly be an important asset in the future. In this video, Davide Roverso, Chief Analytics Officer at eSmart Systems, walks us through everything from how AI is revolutionizing chess to how deep neural network technology enables automated inspection of power grid assets.

First, let’s look at a few examples of AI in the game of chess. In 1997, a watershed moment occurred: for the first time in history, a computer outperformed not just a human but arguably the “best available human” in a purely intellectual endeavor, the game of chess. It was the historic match between IBM’s Deep Blue and the legendary chess champion Garry Kasparov.

After that famous defeat, Kasparov continued to improve his game, including by playing more and more against computers, as most professional players routinely do nowadays. He reached his highest Elo rating, 2851, in 1999. The current world record belongs to Magnus Carlsen, who reached an Elo rating of 2882 in 2014, 31 points higher than Kasparov. In practice, this rating difference would translate into an expected score of 54 to 46 in Carlsen’s favor in a hypothetical 100-game match (where a win counts as one point and a draw as half a point).
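The 54-to-46 figure follows from the widely used logistic form of the Elo expected-score formula. As a worked example, with Carlsen’s peak rating $R_A = 2882$ and Kasparov’s $R_B = 2851$:

$$
E_A = \frac{1}{1 + 10^{(R_B - R_A)/400}} = \frac{1}{1 + 10^{-31/400}} \approx \frac{1}{1 + 0.837} \approx 0.544
$$

Over 100 games, that is about 54.4 points for Carlsen and 45.6 for Kasparov, which rounds to 54 to 46.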

Meanwhile, computer chess has been improving at a much faster pace: Stockfish, one of the best chess programs today, reached an Elo rating of 3387 in 2016. In other words, Stockfish would beat Carlsen hands down, 95 to 5, in a 100-game match.
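The same rating arithmetic can be checked in a few lines of Python. This is a minimal sketch assuming the logistic Elo model with the conventional 400-point scale; the function names are our own.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B (1 = certain win, 0 = certain loss)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def expected_match_points(rating_a: float, rating_b: float, games: int = 100) -> float:
    """Expected points for A over a match, counting a win as 1 and a draw as 0.5."""
    return games * expected_score(rating_a, rating_b)


# Stockfish (2016) against Carlsen's peak rating (2014).
print(round(expected_match_points(3387, 2882)))  # 95
print(round(expected_match_points(2882, 3387)))  # 5
```

Because the two players’ expected scores always sum to one, the 505-point gap is what turns a roughly even contest into a 95-to-5 rout.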

Watch the entire presentation here. Alternatively, continue reading the summary of the presentation below.

Revolutionary World Chess Dominance

However, this is not the AI revolution we want to talk about: these are just machines with better pre-coded strategies, running faster and analyzing more moves more deeply. The real revolution has happened over the last few years, and a fine example is AlphaZero, a machine-learning-based system developed by DeepMind, the London-based AI research company now part of Alphabet. What makes AlphaZero significant and revolutionary is that it starts from what we call a tabula rasa: it has no pre-coded knowledge other than the rules of the game, and it learns to play completely autonomously, through self-play alone. It has no heuristics, logic, or game strategy to draw on, no knowledge of previous games, and no way of interacting with chess experts. Still, after only nine hours of self-play (and, admittedly, about 44 million games), AlphaZero beat Stockfish hands down with a score of 64 to 36 (winning 28 games, drawing 72, and losing none), thereby reaching an estimated Elo rating of over 3500. World chess dominance in nine hours, starting from scratch: that is revolutionary!
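To make “learning from the rules alone, by self-play” concrete, here is a deliberately tiny sketch. It is not AlphaZero’s method (AlphaZero combines a deep neural network with Monte Carlo tree search); we swap in plain tabular Q-learning on a toy Nim variant (take 1 to 3 stones, whoever takes the last stone wins). The game, names, and parameters are all illustrative assumptions. The learner is given only the legal moves and the win condition, and the same value table plays both sides of every game.

```python
import random

MAX_STONES = 10
ACTIONS = (1, 2, 3)


def legal_actions(stones):
    # The only built-in knowledge: the rules of the game.
    return [a for a in ACTIONS if a <= stones]


def train_by_self_play(episodes=5000, alpha=0.5, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    # q[(stones, action)]: value of that move for the player making it (+1 win, -1 loss).
    q = {(s, a): 0.0 for s in range(1, MAX_STONES + 1) for a in legal_actions(s)}

    def best_value(stones):
        return max(q[(stones, a)] for a in legal_actions(stones))

    for _ in range(episodes):
        stones = rng.randint(1, MAX_STONES)
        while stones > 0:
            moves = legal_actions(stones)
            if rng.random() < epsilon:
                action = rng.choice(moves)  # explore a random legal move
            else:
                action = max(moves, key=lambda a: q[(stones, a)])  # play the best known move
            remaining = stones - action
            # Taking the last stone wins; otherwise the move is worth the negation of the
            # opponent's best reply, read from the same shared table (self-play).
            target = 1.0 if remaining == 0 else -best_value(remaining)
            q[(stones, action)] += alpha * (target - q[(stones, action)])
            stones = remaining
    return q


def greedy_move(q, stones):
    return max(legal_actions(stones), key=lambda a: q[(stones, a)])


q = train_by_self_play()
print([greedy_move(q, s) for s in (5, 6, 7, 9, 10)])
```

After training, the greedy policy should rediscover the known winning strategy for this game, always leaving the opponent a multiple of four stones (for example, taking 1 from 5 and 2 from 10), even though that strategy was never coded in.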

To further stress the fundamental difference in the level of “intelligence” between Stockfish and AlphaZero, consider that while Stockfish was evaluating around 70 million positions per second during this match, AlphaZero evaluated only about 80 thousand positions per second, and still beat Stockfish. That is roughly 875 times less “brute force”, a clear demonstration that AlphaZero achieves a much better understanding of what chess is about and does not need to mindlessly analyze so many possible move sequences.

Similar revolutions are happening in fields such as image synthesis (see, for example, the amazing recent results obtained with BigGAN models and by NVIDIA), self-driving vehicle technology (see, for example, the Tesla Autopilot video, already two years old), and robotics (see the by-now-famous Atlas videos from Boston Dynamics).

Drivers of the Revolution

But what is driving this AI revolution? Three main factors are now widely recognized.

The first is data. Artificial intelligence, and specifically machine learning, uses data to learn and build different types of models, such as predictive models and generative models. The vast amounts of data available in today’s digital world make it possible to build much more robust, reliable, accurate, and sophisticated models than before. As Peter Norvig, Google’s Director of Research, famously put it: at Google, “We don’t have better algorithms. We just have more data.”

The second driving force is the new paradigm of deep neural networks. Artificial neural networks are not a new technology per se; they have been around for a very long time, and we were already using them more than 20 years ago, though as much simpler models than those we use today. In an artificial neural network, artificial neurons (which typically compute a weighted sum of their inputs and pass it through a nonlinear activation function) are normally organized in layers, mimicking the layered structure of the mammalian cerebral cortex. Until recently, the most common neural network structure had only three such layers: an input layer, one hidden layer, and an output layer. This simple structure allowed a neural network to learn relatively simple tasks, such as recognizing handwritten digits or approximating simple functions. Today, we can effectively train much larger neural networks, with tens or even more than a hundred layers, that can tackle very complex tasks such as learning to play chess or driving a car.
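A minimal pure-Python sketch of this layered structure follows. The tanh activation and the random initial weights are our own illustrative choices, not details from the talk, and the networks are untrained, so their outputs carry no meaning. Each neuron computes a weighted sum plus a bias and applies a nonlinearity, a layer is a list of neurons, and a “deep” network is simply more of the same layers stacked.

```python
import math
import random


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then a nonlinearity."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return math.tanh(z)


def dense_layer(inputs, weight_rows, biases):
    """A fully connected layer: every neuron sees every input."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def init_network(layer_sizes, seed=0):
    """Random weights (scaled by 1/sqrt(fan-in)) and zero biases for each pair of layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(network, inputs):
    """Feed the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in network:
        activations = dense_layer(activations, weights, biases)
    return activations


shallow = init_network([4, 8, 2])           # input, one hidden, output: the classic three layers
deep = init_network([4] + [8] * 20 + [2])   # the same building blocks, 20 hidden layers deep
print(forward(shallow, [0.1, 0.2, 0.3, 0.4]))
print(len(deep))  # 21 weight layers
```

The building blocks are the same in both cases; what changed in recent years is mainly that networks like `deep`, scaled up enormously, can now be trained effectively.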

Training and running these very complex models requires very high computing power, which is the third and final factor. Deep neural networks can have millions of neurons and connections, which must be gradually tuned on very large datasets to solve the specific tasks at hand. The most common computing platform today is based on graphics processing units, or GPUs. These are mini-supercomputers that can run as fast as the best supercomputers of only ten years ago, such as the NEC Earth Simulator, which held the speed record from 2002 to 2004 and was housed in a two-story building measuring 65 m × 50 m. Originally developed for graphics and gaming, GPUs have highly parallel architectures that were recently repurposed for AI, and they have quickly become the reference computing platform for deep neural networks. New AI-specific processors are also being developed that will bring computing power and efficiency to even higher levels.
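To give a sense of how quickly the number of connections grows, the sketch below counts the trainable parameters (weights and biases) of a fully connected network from its layer widths. The layer sizes are hypothetical: 784 inputs corresponds to a 28 × 28 pixel image, such as a handwritten digit. Real image models are usually convolutional and structured differently, so treat the numbers as illustrative only.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


small = count_parameters([784, 100, 10])              # classic three-layer network
large = count_parameters([784] + [1024] * 20 + [10])  # 20 wide hidden layers
print(f"{small:,}")  # 79,510
print(f"{large:,}")  # 20,756,490
```

In a forward pass, each weight takes part in one multiply-add per input example, and those multiply-adds within a layer are independent of one another, which is exactly the kind of work a GPU’s parallel architecture handles well.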

Deep Neural Network Technology for Automated Inspection of Power Grid Assets

The title of this presentation (and blog post), however, is “Artificial intelligence – a revolution in the air”. Why is that? Some years ago, we had an idea. We asked ourselves: what if we could combine AI with drones? What could we achieve?

This resulted in our Connected Drone project, in which we use deep neural network technology, similar to what you find in, for example, a self-driving Tesla, to automatically recognize the assets and components on power masts that are relevant for inspections. The same technology is also used to develop intelligent functions for detecting and localizing faults and anomalies. These include automated observations of obstructions on power lines, missing components, cracks in wooden poles, woodpecker damage, rot, conductor damage, rust on steel masts, and more.
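One way to picture the two stages described here, recognizing components first and then looking for faults on each of them, is the hypothetical sketch below. The `Detection` type, the component labels, the score threshold, and the stub models are all our own placeholders rather than details of the actual system; in practice the detector and fault classifiers would be trained deep neural networks. It assumes Python 3.9 or later.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Detection:
    label: str                      # component type, e.g. "wooden_pole" (hypothetical labels)
    box: tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
    score: float                    # detector confidence in [0, 1]


# Stage 1: image -> detected components. Stage 2: (image, component) -> fault labels.
Detector = Callable[[Any], list[Detection]]
FaultClassifier = Callable[[Any, Detection], list[str]]


def inspect_image(image: Any, detector: Detector,
                  fault_models: dict[str, FaultClassifier],
                  min_score: float = 0.5) -> list[tuple[Detection, list[str]]]:
    """Run component detection, then the fault model registered for each component type."""
    findings = []
    for det in detector(image):
        if det.score < min_score:
            continue  # skip low-confidence detections
        classifier = fault_models.get(det.label)
        faults = classifier(image, det) if classifier else []
        findings.append((det, faults))
    return findings


# Usage with stub models standing in for trained networks.
def stub_detector(image):
    return [Detection("wooden_pole", (10, 0, 40, 300), 0.92),
            Detection("insulator", (25, 20, 35, 30), 0.31)]


fault_models = {"wooden_pole": lambda image, det: ["crack"]}
for det, faults in inspect_image(None, stub_detector, fault_models):
    print(det.label, faults)  # wooden_pole ['crack']
```

Keeping one fault model per component type means a new fault category (say, rust on steel masts) can be added without retraining the component detector.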

All the image analysis is powered by an industrialized solution running on the Microsoft Azure cloud. Working in close cooperation with Microsoft, we developed an architecture that automatically scales to process very large amounts of data, video feeds, and images. In one of a number of stress tests, we provisioned and ran more than 300 virtual machines in the cloud at once and analyzed more than 180,000 images an hour through a full pipeline of object and fault recognition models. That is far more than a human inspector can process in a year!
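A quick back-of-the-envelope reading of those stress-test figures is sketched below. Both inputs are lower bounds in the text (“more than”), and the per-image time assumes each virtual machine works through its images one at a time, which is our simplification rather than a detail of the actual architecture.

```python
def throughput(images_per_hour: float, machines: int):
    """Derive cluster-wide and per-VM rates from an hourly image count."""
    per_second = images_per_hour / 3600
    per_vm_hour = images_per_hour / machines
    # Only meaningful if each VM processes a single image at a time (an assumption).
    seconds_per_image_per_vm = 3600 / per_vm_hour
    return per_second, per_vm_hour, seconds_per_image_per_vm


per_second, per_vm_hour, seconds_per_image = throughput(180_000, 300)
print(f"{per_second:.0f} images/s across the cluster")          # 50 images/s across the cluster
print(f"{per_vm_hour:.0f} images per VM per hour")              # 600 images per VM per hour
print(f"about {seconds_per_image:.0f} s per image on each VM")  # about 6 s per image on each VM
```

The point of the elastic architecture is visible in the numbers: no single machine is especially fast, but adding machines scales the hourly total roughly linearly.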

In April 2018, we decided to take this technology literally into the air. Through a cooperation with Versor, a Norwegian start-up focused on autonomy, we successfully demonstrated what is, to our knowledge, the world’s first fully automated inspection of power grid assets: an autonomous drone inspection with no human involvement.

Versor’s drone technology uses laser scanning sensors (a 2D LiDAR in this case) to map its surroundings. By knowing its position and the obstacles around it, the drone can plan its flight path and complete a defined mission. We adapted our AI technology for power line asset detection to run onboard the drone (in this case on an NVIDIA TX2 GPU) and combined it with the environment mapping provided by Versor, which allowed us to demonstrate a fully autonomous inspection. During the inspection, the drone combined the 3D awareness provided by the LiDAR sensor with the visual awareness provided by the onboard AI to plan its flight and position itself at the right spots to take high-resolution images from the optimal vantage point.
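As a rough illustration of how range data and asset detections might be combined when choosing where to take a picture, here is a simplified planar sketch. It is not Versor’s planner and works in 2D rather than 3D; the function names, the standoff and clearance parameters, and the convention that a zero range means “no return” are our own assumptions. It converts a laser scan from polar to Cartesian coordinates and then picks, among candidate positions, the one closest to a desired standoff distance from the asset while staying clear of every mapped obstacle point.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, max_range):
    """Convert a 2D laser scan (one range per beam) into (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        if 0.0 < r < max_range:  # assumption: 0 or >= max_range means no valid return
            theta = angle_min + i * angle_increment
            points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def pick_viewpoint(candidates, asset, obstacles, standoff, clearance):
    """Pick the candidate whose distance to the asset best matches `standoff`,
    skipping any candidate closer than `clearance` to an obstacle point."""
    best, best_error = None, math.inf
    for c in candidates:
        if any(math.dist(c, o) < clearance for o in obstacles):
            continue  # too close to something the LiDAR saw
        error = abs(math.dist(c, asset) - standoff)
        if error < best_error:
            best, best_error = c, error
    return best


# Usage: three beams at 0, 90 and 180 degrees; the middle beam got no return.
obstacles = scan_to_points([2.0, 0.0, 5.0], angle_min=0.0,
                           angle_increment=math.pi / 2, max_range=10.0)
asset = (2.0, 0.0)  # hypothetically, where the onboard detector located a mast component
viewpoint = pick_viewpoint([(2.0, 0.5), (2.0, 3.0), (2.0, 6.0)], asset,
                           obstacles, standoff=3.0, clearance=1.0)
print(viewpoint)  # (2.0, 3.0)
```

The first candidate is rejected for being too close to the mapped structure, and of the remaining two, the one at exactly the desired standoff wins.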

Our journey started in January 2015, when we first launched the Connected Drone project. We have had our hiccups and challenges, but we have also come a very long way, as the April 2018 demonstration showed, and we hope that many will see the possibilities this revolution is opening and will choose to join us on the exciting journey ahead.
